The Fundamentals of Gaze Tracking

Discover the impact of gaze tracking technology on human behavior. Explore applications and insights in this journey with Codemonk.

Have you ever strolled through a shopping mall or browsed an online store and found yourself drawn to certain products? Ever wondered why some items seem to catch your eye more than others? It's no accident. Behind the scenes, there's a sophisticated system of attention catching at work.

Think about it: why do certain products sit prominently on shelves, and why do ads on TV seem to hold your attention so effectively? It comes down to strategy. Whether you're in a physical store or browsing online, designers carefully arrange products and advertisements to draw your eye.

Attention is a powerful tool that can reveal a lot about human behaviour. Think of those personality tests where you're asked to describe what you see in an image to get a glimpse of your mind. By analysing where your gaze lingers, experts can paint a picture of your personality and preferences. Many cognitive studies have also been built on this kind of data, and their findings are used in medical tests.

In this blog, we'll delve into the world of gaze tracking technology – exploring how it works, its applications in everyday life, and the insights it can unlock about human behaviour. So, let's embark on this journey together and uncover the secrets behind where your eyes wander.

Significance of gaze

The significance of understanding where our gaze falls cannot be overstated. The implications are vast and varied, spanning across industries such as e-commerce, graphic design, healthcare, sports, gaming, and more.

Think about it – our eyes are constantly drawn to points of interest, whether it's a captivating image, a crucial piece of information, or a compelling advertisement. By harnessing the power of gaze tracking technology, businesses can gain invaluable insights into user behaviour and preferences.

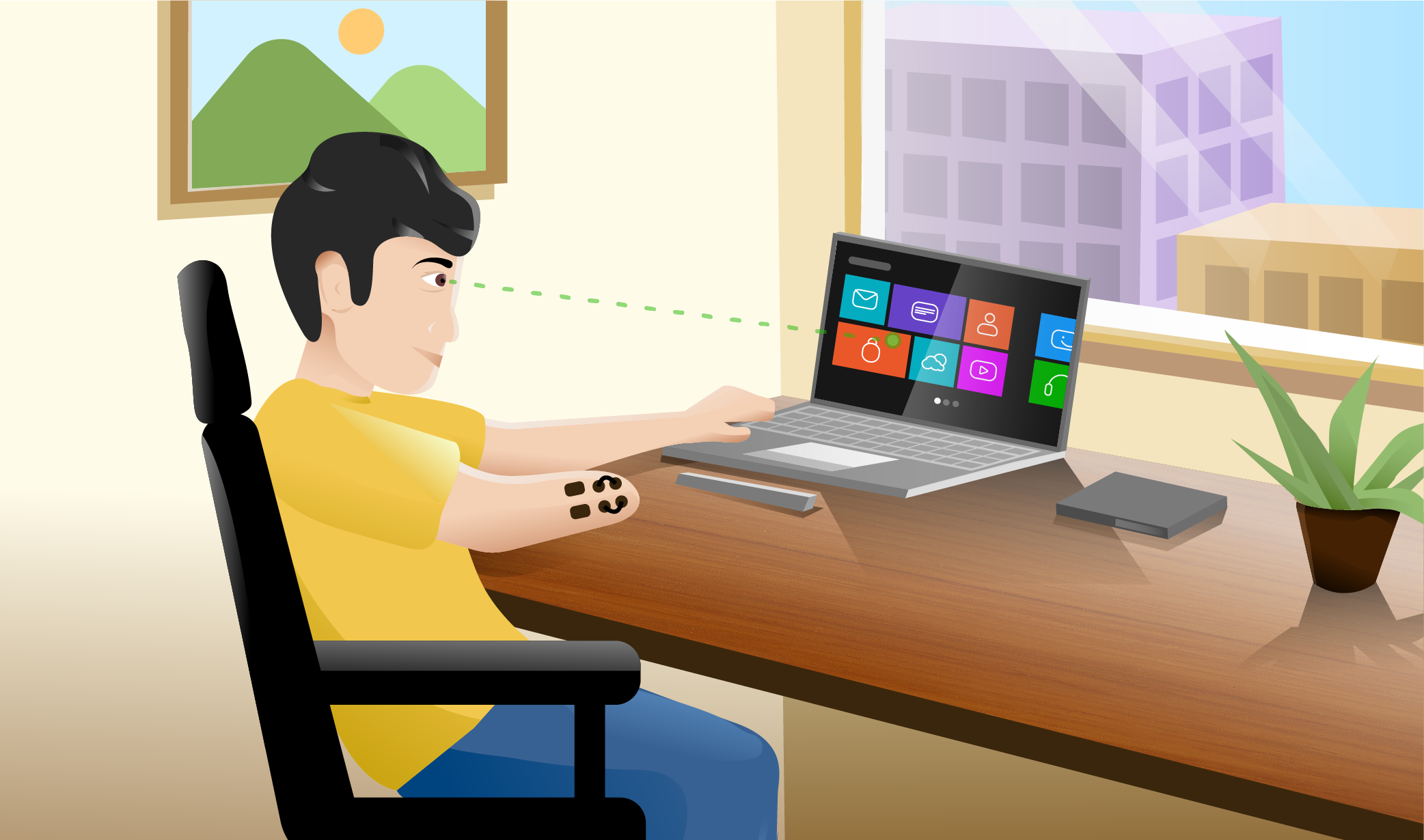

Imagine using your laptop, mobile phone, or tablet, and effortlessly controlling the cursor with just your gaze. It's not science fiction – it's the future of user interaction. With advancements in AI algorithms, we're on the brink of a revolution in gaze tracking technology.

By understanding where users are looking on their screens, developers can create more intuitive interfaces and personalised experiences. From enhancing accessibility for individuals with disabilities to revolutionising how we play games or shop online, the possibilities are endless.

So, let's delve deeper into the fascinating world of gaze tracking and explore how this technology is reshaping the way we interact with digital devices.

What is Gaze tracking?

Gaze tracking is the process of identifying where a person is looking on a screen. Using AI models trained on data, we can predict both the gaze direction and the point on the screen the user is looking at (i.e. a mapping from the real world to the pixel world!).

But, how does AI predict your gaze?

The two main underpinnings for understanding and modelling gaze are physics and maths. A change in gaze on the screen can be determined from the movement of the eyeball.

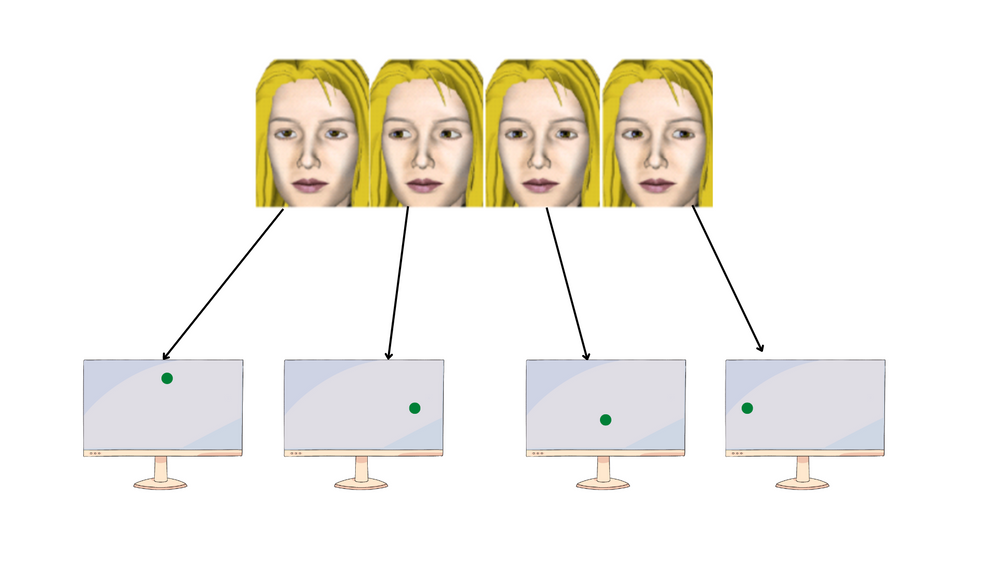

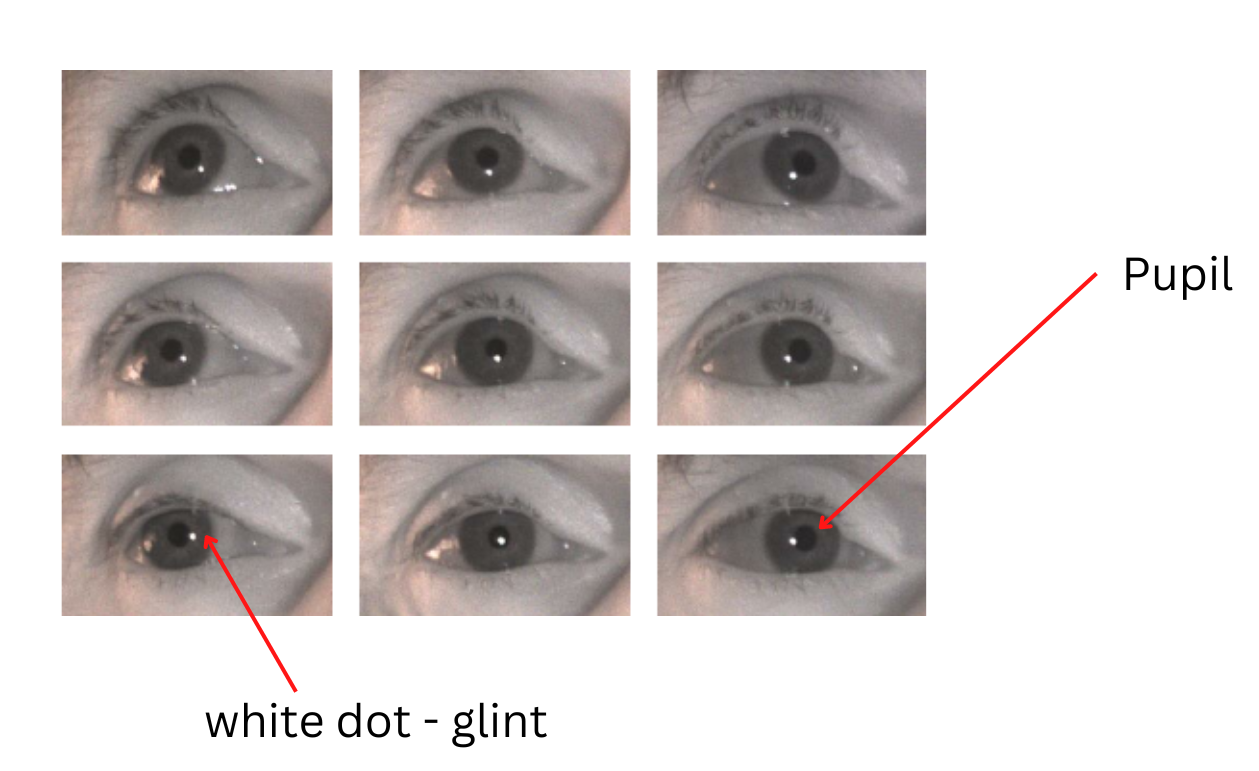

The main input to the AI algorithm in gaze tracking is an image of your face captured by a camera (for example, a webcam). As noted above, the eyes play the most important role, so the eye regions are cropped from the captured image. As the images above show, there is a measurable difference between the eye crops when a person's gaze changes.
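The cropping step can be sketched in a few lines. This is a minimal illustration, not the method used by any particular system: it assumes some face-landmark detector (not shown) has already returned pixel coordinates for the two corners of each eye, and the `scale` and `aspect` parameters are hypothetical padding choices.

```python
import numpy as np

def crop_eye(image, inner_corner, outer_corner, scale=1.5, aspect=0.6):
    """Crop a rectangular eye patch centred between two eye-corner landmarks.

    image: H x W (grayscale) or H x W x C array.
    inner_corner, outer_corner: (x, y) pixel coordinates, assumed to come
        from a landmark detector that is not part of this sketch.
    scale: patch width as a multiple of the corner-to-corner distance (assumed).
    aspect: patch height divided by patch width (assumed).
    """
    h, w = image.shape[:2]
    (x1, y1), (x2, y2) = inner_corner, outer_corner
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    eye_width = np.hypot(x2 - x1, y2 - y1)
    half_w = scale * eye_width / 2.0
    half_h = aspect * half_w
    # Clamp the box to the image bounds so the slice is always valid.
    left = max(int(round(cx - half_w)), 0)
    right = min(int(round(cx + half_w)), w)
    top = max(int(round(cy - half_h)), 0)
    bottom = min(int(round(cy + half_h)), h)
    return image[top:bottom, left:right]

# Stand-in for a 640x480 grayscale webcam frame.
frame = np.zeros((480, 640), dtype=np.uint8)
left_eye = crop_eye(frame, inner_corner=(300, 200), outer_corner=(260, 200))
right_eye = crop_eye(frame, inner_corner=(340, 200), outer_corner=(380, 200))
```

Sizing the patch from the corner-to-corner distance keeps the crop roughly scale-invariant as the user moves closer to or further from the camera.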

Researchers have developed multiple methods to estimate gaze location. Using features of your eyes, an AI model can predict the location of gaze on the screen. Gaze can be determined using these two setups:

- Using specialised eyewear, an infrared camera, and other visual sensors.

- Using only a mobile phone or laptop and its camera.

Before moving on to these methods, let's take a look at some of the terminology used in gaze tracking. It will help us understand the concepts better.

Terminologies used in Gaze Tracking

-

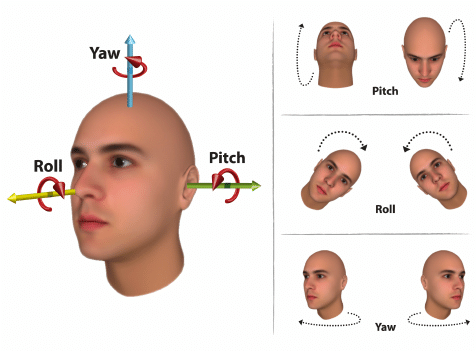

Head pose/orientation: Position or orientation of your head while holding a mobile, when sitting in front of a desktop/laptop, or while wearing an eye-wear.

-

Head Pitch: Pitch is the angle in the z-y plane with the horizontal axis at the origin.

-

Head Yaw: Yaw is the angle in the x-z plane with the vertical axis at the origin.

-

Head Roll: Roll is the angle in the x-y plane with the third axis(z) at the origin.

Fig - head pitch, yaw, and roll (Source)

- Gaze vector: The gaze direction, expressed in the user's frame of reference (the object coordinate system).

- Gaze location: A point on the screen, expressed in the screen coordinate system.

- Error in degrees: The angular difference between the actual gaze vector and the predicted gaze vector.

- Error in pixels/cm: The distance between the actual gaze location and the predicted gaze location.

- Fixations: Phases when the eyes are stationary at some point on the screen. The fixation duration is how long the gaze stays concentrated on a particular location of the screen.

- Saccades: Rapid, often involuntary eye movements that occur between fixations. Measurable saccade-related parameters include saccade number, amplitude, and fixation-saccade ratio.

- Scanpath: A series of short fixations and saccades that alternate before the eyes reach a target location on the screen. Movement measures derived from the scanpath include its direction, duration, length, and area covered.

- Gaze duration: The sum of all fixations made in an area of interest on the screen before the eyes leave that area. The proportion of time spent in each area is also reported.

- Pupil size and blink: Pupil size and blink rate are measures used to study cognitive workload.
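The two error measures in the list above are straightforward to compute. The following is a small sketch using only the standard library; the function names and the `pixels_per_cm` display density are illustrative assumptions, not part of any specific gaze tracking library.

```python
import math

def angular_error_deg(true_vec, pred_vec):
    """Angle in degrees between the actual and predicted 3D gaze vectors."""
    dot = sum(t * p for t, p in zip(true_vec, pred_vec))
    norm_t = math.sqrt(sum(t * t for t in true_vec))
    norm_p = math.sqrt(sum(p * p for p in pred_vec))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / (norm_t * norm_p)))
    return math.degrees(math.acos(cos_angle))

def pixel_error(true_pt, pred_pt):
    """Euclidean distance in pixels between actual and predicted gaze locations."""
    return math.hypot(pred_pt[0] - true_pt[0], pred_pt[1] - true_pt[1])

def pixels_to_cm(error_px, pixels_per_cm):
    """Convert a pixel error to centimetres for a given display density."""
    return error_px / pixels_per_cm

# Example: a user looking straight at the screen, with a slightly off prediction.
err_deg = angular_error_deg((0.0, 0.0, -1.0), (0.05, 0.0, -1.0))
err_px = pixel_error((960, 540), (990, 580))
err_cm = pixels_to_cm(err_px, pixels_per_cm=40.0)  # 40 px/cm is an assumed density
```

Reporting the error in degrees makes results comparable across screen sizes and viewing distances, while the pixel or centimetre error is what matters for a specific device.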

Having seen these terms, we will now look at the different approaches and methods used for gaze tracking.

Overview of General Techniques

2D Regression

- Regression is a machine learning technique that maps input data (numerical or categorical values) to a continuous numerical output. In gaze tracking, polynomial equations are used as the mapping functions.

- Least squares regression is then used to determine the polynomial coefficients such that the differences between the reported PoGs (points of gaze on the monitor) and the actual PoGs are minimized. The regressions are done separately for the left and right eyes, and an interpolated PoG is calculated as the average of the (X left-eye, Y left-eye) and (X right-eye, Y right-eye) coordinates of a location on the screen. Here you can explore more on the mathematics of this method. Head movement also matters in such estimation; one research paper explains the problem and offers a solution using support vector regression (SVR).

- Here the center of the eye (iris) is an important parameter, and the polynomial equation is derived through experimentation. In such methods, the vector between the pupil center and the corneal glint is mapped to the corresponding gaze coordinates on the device screen using a polynomial transformation function. According to Hennessey et al. (2008), the polynomial order may vary but is mostly of the first order:

X = a0 + a1x + a2y + a3xy

Y = b0 + b1x + b2y + b3xy

Here (x, y) is the pupil-glint vector, (X, Y) is the gaze point on the screen, and a0 to a3 and b0 to b3 are the coefficients found during calibration.

Fig - Pupil and the corneal glint in eyes (reference)
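The least squares fit of the polynomial above can be sketched with NumPy. This is an illustration under stated assumptions: the calibration data is synthetic, the helper names (`design_matrix`, `fit_gaze_mapping`, `predict_gaze`, `combine_eyes`) are hypothetical, and a real system would fit one mapping per eye from measured pupil-glint vectors.

```python
import numpy as np

def design_matrix(vectors):
    """Build rows [1, x, y, x*y] from pupil-glint vectors (x, y)."""
    v = np.asarray(vectors, dtype=float)
    x, y = v[:, 0], v[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y])

def fit_gaze_mapping(vectors, screen_points):
    """Least squares fit; returns a (4, 2) array.

    Column 0 holds a0..a3 (for X), column 1 holds b0..b3 (for Y).
    """
    A = design_matrix(vectors)
    targets = np.asarray(screen_points, dtype=float)
    coeffs, *_ = np.linalg.lstsq(A, targets, rcond=None)
    return coeffs

def predict_gaze(coeffs, vectors):
    """Map pupil-glint vectors to screen coordinates (X, Y)."""
    return design_matrix(vectors) @ coeffs

def combine_eyes(left_pog, right_pog):
    """Average the left-eye and right-eye points of gaze."""
    return (np.asarray(left_pog, dtype=float) + np.asarray(right_pog, dtype=float)) / 2.0

# Synthetic 3x3 calibration grid of pupil-glint vectors (illustrative values).
grid = [(x, y) for x in (-1.0, 0.0, 1.0) for y in (-1.0, 0.0, 1.0)]
true_coeffs = np.array([[960.0, 540.0],
                        [400.0, 0.0],
                        [0.0, 300.0],
                        [5.0, -5.0]])
targets = design_matrix(grid) @ true_coeffs

fitted = fit_gaze_mapping(grid, targets)
pog = predict_gaze(fitted, [(0.5, 0.5)])[0]
```

With noisy real measurements the fit will not be exact, which is why calibration usually shows more target points than the four unknowns per axis strictly require.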

-

3D modeling method

-

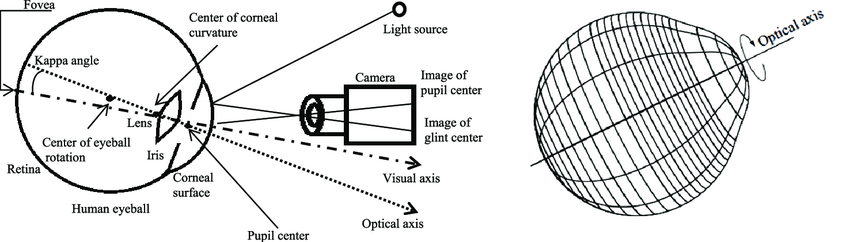

This method uses a geometrical model of the human eye with the center of the cornea, optical and visual axes of the eye, which are used to estimate the gaze as points of intersection where the visual axes meet the screen.

-

The optical axis is shown as the line joining the center of curvature of the cornea with the pupil center. The visual axis passes through the fovea and the center of corneal curvature. The Kappa angle is the angular deviation between the optical and visual axis

-

Using information like pupil center, glint reflection, and courtier points the optical axis is obtained, and then estimates of the visual axis using kappa angle which is generally 5 degrees. For precise measurements, user calibration (studying unique user) is done.

-

In the user calibration process, a few target locations are shown on the screen and the user looks at them, in the back-end gaze estimation happens with all measurements of above said terms. This helps in the estimation of the gaze, which is personalized to the user.

-

Here 3D eye model is used for the estimation of gaze, where cameras are required as well as infrared light is used. Accuracy is said to be highest in such methods but requires a complex setup for making it work.

Fig - 3D model of the human eye (source)
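The geometric core of this method, intersecting an eye axis with the screen plane, can be sketched as follows. The coordinate frame (screen in the plane z = 0, distances in millimetres), the eye measurements, and the use of a single horizontal rotation to stand in for the kappa offset are all simplifying assumptions for illustration; a real system estimates these per user during calibration.

```python
import numpy as np

def rotate_about_y(vec, angle_deg):
    """Rotate a 3D vector about the vertical (y) axis.

    Used here as a crude, horizontal-only stand-in for the kappa offset.
    """
    a = np.radians(angle_deg)
    rot = np.array([[np.cos(a), 0.0, np.sin(a)],
                    [0.0, 1.0, 0.0],
                    [-np.sin(a), 0.0, np.cos(a)]])
    return rot @ np.asarray(vec, dtype=float)

def intersect_screen(origin, direction, plane_point, plane_normal):
    """Return the point where the ray origin + t * direction meets the plane.

    Returns None if the ray is parallel to the plane or points away from it.
    """
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    plane_point = np.asarray(plane_point, dtype=float)
    plane_normal = np.asarray(plane_normal, dtype=float)
    denom = np.dot(plane_normal, direction)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the screen
    t = np.dot(plane_normal, plane_point - origin) / denom
    if t < 0:
        return None  # screen is behind the eye
    return origin + t * direction

# Assumed setup: screen lies in the plane z = 0, eye is 600 mm in front of it.
screen_point = np.array([0.0, 0.0, 0.0])
screen_normal = np.array([0.0, 0.0, 1.0])
cornea_center = np.array([0.0, 0.0, 600.0])
pupil_center = np.array([0.0, 0.0, 595.8])

# Optical axis: from the center of corneal curvature through the pupil center.
optical_axis = pupil_center - cornea_center
optical_axis /= np.linalg.norm(optical_axis)

# Visual axis: optical axis offset by an assumed kappa of 5 degrees.
visual_axis = rotate_about_y(optical_axis, 5.0)

pog_optical = intersect_screen(cornea_center, optical_axis, screen_point, screen_normal)
pog_visual = intersect_screen(cornea_center, visual_axis, screen_point, screen_normal)
```

At a 600 mm viewing distance, a 5 degree offset shifts the intersection point by roughly 52 mm, which shows why per-user calibration of kappa matters for accuracy.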

-

-

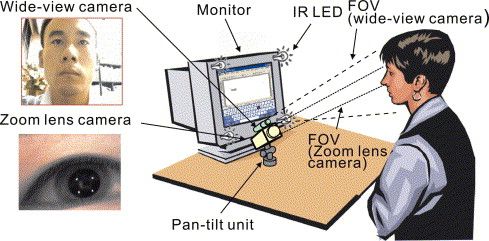

Cross-ratio based method

- Here the known rectangular pattern of NIR lights is put on the eye of the user and the gaze position is estimated.

Fig - Cross-ratio-based method setup (reference)

- Four LED’s are mounted on four corners of a computer screen and are used to produce glints(reflections) on the surface of the cornea. The gaze location is estimated from the glint positions, the pupil, and the monitor screen's size.

- The projections made gives virtual images of the corneal reflections of the LED’s (scene plane). Also, the camera and light source share the same position hence it creates a bright pupil area. These 2 things are used to map the gaze location. This mapping is done using polynomial-based regression or Gaussian based regression. Then the projection of the PoG (point of Gaze) on the scene plane to the image of the pupil center on the camera plane can be estimated.

- Here the known rectangular pattern of NIR lights is put on the eye of the user and the gaze position is estimated.
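One simplified way to picture the mapping is as a plane-to-plane projective transform (a homography) from the four glints in the camera image to the four screen corners, which is then applied to the pupil center. The sketch below takes that simplified view and is not a full cross-ratio implementation: the glint and pupil coordinates are made up, the function names are hypothetical, and real systems add calibration to correct the bias this simplification introduces.

```python
import numpy as np

def estimate_homography(src_pts, dst_pts):
    """Estimate the 3x3 homography mapping four src points to four dst points.

    Uses the direct linear transform: stack two equations per point pair and
    take the null-space vector of the resulting matrix via SVD.
    """
    rows = []
    for (x, y), (u, v) in zip(src_pts, dst_pts):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pt):
    """Map a 2D point through H using homogeneous coordinates."""
    u, v, w = H @ np.array([pt[0], pt[1], 1.0])
    return np.array([u / w, v / w])

# Illustrative glint positions in eye-patch pixel coordinates, ordered to match
# the screen corners: top-left, top-right, bottom-right, bottom-left.
glints = [(12.0, 10.0), (32.0, 11.0), (33.0, 25.0), (11.0, 24.0)]
# Screen corners in normalised coordinates (0..1 on both axes).
corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

H = estimate_homography(glints, corners)
pupil_center = (22.0, 17.5)
pog_normalised = apply_homography(H, pupil_center)
pog_pixels = pog_normalised * np.array([1920, 1080])  # assumed screen resolution
```

Working in normalised screen coordinates keeps the linear system well conditioned; the result is scaled to pixels only at the end.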

Appearance-based method

- In appearance-based methods, information from the eye region is used to build an AI model that estimates the gaze location.

- This information can include eye patch images, eye corner values, head pose values, etc.

- A convolution-based deep learning model takes this set of information as its input and predicts gaze vectors or gaze points.
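To make the data flow concrete, here is a deliberately tiny forward pass written in plain NumPy: one convolution layer, a ReLU, global average pooling, and a linear layer that also receives the head pose. Everything about it is an assumption for illustration (layer sizes, random untrained weights, the `predict_gaze_point` name); practical models are much deeper, are trained on large labelled datasets, and are normally built with a deep learning framework.

```python
import numpy as np

def conv2d_valid(image, kernels):
    """Apply K kernels to a 2D image with no padding.

    image: (H, W); kernels: (K, kh, kw); returns (K, H-kh+1, W-kw+1).
    Computes cross-correlation, as deep learning frameworks do.
    """
    k, kh, kw = kernels.shape
    h, w = image.shape
    out_h, out_w = h - kh + 1, w - kw + 1
    out = np.zeros((k, out_h, out_w))
    for i in range(kh):
        for j in range(kw):
            patch = image[i:i + out_h, j:j + out_w]
            out += kernels[:, i, j][:, None, None] * patch
    return out

def predict_gaze_point(eye_patch, head_pose, params):
    """Eye patch + head pose -> 2D gaze point (untrained, illustrative)."""
    feats = np.maximum(conv2d_valid(eye_patch, params["kernels"]), 0.0)  # conv + ReLU
    pooled = feats.mean(axis=(1, 2))            # global average pooling -> (K,)
    x = np.concatenate([pooled, head_pose])     # append head pose features
    return params["W"] @ x + params["b"]        # linear layer -> (2,)

rng = np.random.default_rng(0)
params = {
    "kernels": rng.normal(size=(8, 3, 3)),   # 8 random 3x3 filters (untrained)
    "W": rng.normal(size=(2, 8 + 3)),        # 8 pooled features + 3 head pose values
    "b": np.zeros(2),
}
eye_patch = rng.random((36, 60))             # stand-in for a cropped eye image
head_pose = np.array([0.0, 0.1, 0.0])        # pitch, yaw, roll in radians (made up)
gaze_xy = predict_gaze_point(eye_patch, head_pose, params)
```

Because the weights are random, the output here is meaningless as a gaze estimate; the point is only the shape of the pipeline, from image and head pose to a two-value prediction.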

| Evaluation Criteria | 3D Model Method | Regression Method | Cross-Ratio Method | Appearance-Based Method |
|---|---|---|---|---|
| Setup complexity | High | Medium | Medium | Low |
| System calibration | Fully calibrated | Optional | Optional | Optional |
| Hardware requirements (cameras) | 2 + infrared (stereo) | 1 + infrared | 1 + infrared | 1 ordinary |
| Gaze estimation error | < 1 degree | ~1-2 degrees | ~1-2 degrees | > 2 degrees |
| Implicit robustness to head movements | Medium to high | Low to medium | Low to medium | Low |
| Robustness to varying illumination | Medium to high | Medium to high | Medium to high | Low |
| Robustness to use of eyewear | Low | Low | Low | Medium |

Effects of Physical Environment

In the methods above, we have seen how different inputs are used to track gaze: eye patches, corneal glint reflection images, eye corners, pupil centre values, full-face feature values, and so on. But what if your eyes are not detected, the images are blurry, or the image features are of low quality? The application is interrupted, and we may get wrong results for those frames. We therefore need to ensure physical conditions that won't cause problems for the system's operation. The following are some of these conditions.

- If the room is dark, images of your face will not be clear, and neither will the feature values derived from them. This can give wrong results. So even if the system is built to be robust, enough light is required.

- If a person is wearing eyeglasses, the screen may be reflected in the lenses, which can reduce accuracy.

- A person's head pose also matters, because they may be looking at the same point on the screen with a different head angle, which changes how the eyeballs and the apparent gaze look in the images. The system must therefore account for head orientation, either through a dataset with a variety of samples or in the model architecture.

So, the system must be designed with these situations and other physical scenarios in mind. These are the recommended conditions for a smooth experience.
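A system can guard against some of these conditions by checking each frame before running gaze estimation. The sketch below uses two common heuristics, mean brightness for lighting and the variance of a Laplacian filter for blur. The threshold values and function names are assumptions chosen for illustration and would need tuning for a real camera.

```python
import numpy as np

def mean_brightness(gray):
    """Average pixel intensity of a grayscale frame (0 to 255)."""
    return float(np.mean(gray))

def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian; low values suggest a blurry frame."""
    g = gray.astype(float)  # avoid uint8 overflow in the arithmetic below
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def frame_is_usable(gray, min_brightness=40.0, min_sharpness=20.0):
    """Reject frames that are too dark or too blurry (assumed thresholds)."""
    return (mean_brightness(gray) >= min_brightness
            and laplacian_variance(gray) >= min_sharpness)

rng = np.random.default_rng(0)
dark_frame = np.zeros((64, 64), dtype=np.uint8)             # no light at all
flat_frame = np.full((64, 64), 128, dtype=np.uint8)         # bright but featureless
textured_frame = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)

results = [frame_is_usable(f) for f in (dark_frame, flat_frame, textured_frame)]
```

Skipping unusable frames, and perhaps prompting the user to improve the lighting, is usually preferable to reporting a gaze point computed from poor input.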

Applications of Gaze Tracking

1. Controlling Device Operations:

Gaze tracking can help students with disabilities take digital or online exams and can also help them study. Using only their eyes, they can control the mouse cursor and even write using a virtual keyboard. This gives them freedom and independence, so they no longer need to rely on another person to write exams for them.

2. Shopping Malls:

As stated at the start of the article, shopping malls use specific user patterns to arrange products. Using gaze tracking technology, IKEA tested and studied its customers to understand what a customer actually looks at and how the customer's attention moves across various items. After the customer has finished shopping, the collected data is analysed further to inform marketing strategies.

3. Gaming:

Similarly, gaze tracking can be used to play PlayStation or desktop games. Instead of a joystick or mouse, you control and play the game with your eyes.

There are many more applications that can be built on gaze tracking. At the same time, although AI lets us create robust systems, there are precautions that need to be taken when using such applications. Because these systems are built on data, we need to ensure that the input feeds are correct and valid in real time.

Conclusion

Gaze tracking systems offer various methods to determine a person's point of focus on a screen. The applications of gaze estimation are wide-ranging and have significant potential across different industries. The point of gaze in our daily lives provides valuable insights about individuals if observed closely. Leveraging gaze tracking technology can enhance our interactions with mobile devices, tablets, laptops, and desktops, streamlining their operations and management. Furthermore, gaze tracking can be applied effectively in eye-testing scenarios. Gaming experiences can be taken to the next level by incorporating gaze-operated controls. Gaze estimation, as a branch of artificial intelligence or deep learning, holds immense promise for the future.

Want more insights and expert tips? Don't just read about gaze tracking! Work with brilliant engineers at Codemonk and experience its effective use cases firsthand!

References

- IKEA shopping mall example - IKEA shopping