Creating a RAG-based Blog Writer API with LangChain - Langflow

Discover how to build a RAG-based Blog Writer API using LangChain and Langflow. This guide covers creating a powerful content generation tool that combines retrieval and language models, enhancing accuracy and efficiency in generating high-quality blog posts.

Whenever the question “Can AI replace humans?” or some variation of it has been put forth, we at Codemonk have always responded, “AI should upskill people rather than replace them.” That response has never been truer than it is today, when technological innovations give people newer, more efficient pathways to solving problems. At Codemonk, technology serves as a helping hand that improves the products and services we build for our clients. Today, we would like to demonstrate one such helping hand, Retrieval-Augmented Generation (RAG), which has enabled us to develop several utilitarian products for our clients.

Retrieval-Augmented Generation (RAG) adds an interesting flavor to the field of natural language processing and enterprise workflows, combining the power of large language models (LLMs) with the ability to retrieve and incorporate relevant information from external knowledge bases. This approach offers several key benefits for enterprises:

- Enhanced Accuracy: By grounding responses in retrieved information, RAG reduces hallucinations and improves the factual accuracy of generated content.

- Customization: RAG allows enterprises to leverage their own proprietary data and domain-specific knowledge, tailoring outputs to their unique needs.

- Cost-Efficiency: By reducing the need for constant fine-tuning of large models, RAG can significantly lower computational costs. A recently published DeepMind article reportedly supports this view by questioning the need for extensive training of LLMs.

- Scalability: RAG systems can easily incorporate new information without requiring the retraining of the entire model.

- Transparency: The retrieval step in RAG provides a clear source for the information used in generation, enhancing explainability and trust.

These advantages make RAG an invaluable tool for simplifying and improving various enterprise workflows, from customer support to content creation and decision-making processes.

Now that we understand what RAG is, let's look at how we can utilize it (in the most user-friendly manner):

Think of RAG as an ocean of capabilities that we need a ship to cross. We will build our ship (the LLM application that solves our problems) using LangChain, an open-source framework designed to simplify the development of applications that use large language models. It provides a set of tools and components that make it easier to build complex language model pipelines, including RAG systems. Next, we need a ship captain who guides the ship along the most efficient route to the problem at hand. We hire that captain through Langflow, a graphical user interface (GUI) for assembling these pipelines. It provides a drag-and-drop interface where users can connect different components to create powerful language model applications without writing extensive code.

Together, LangChain and Langflow provide a powerful and accessible way to implement RAG systems. They allow developers to quickly prototype and deploy sophisticated language model applications that can retrieve information from various sources and use it to generate high-quality, contextually relevant outputs. All that is left is to drive the ship through the oceans toward uncharted territories.

Below is a detailed step-by-step guide to building such a ship, with a suitable captain to guide it. In technical terms, by following the guide below, you will be able to tap into a potent LLM, guide it using the Langflow UI, and solve discrete enterprise use cases without any intensive development. To keep things simple, let's build a blog writer for generic news reporting across a specific industry. The intent of the blog writer is to curate the latest news about important events in society, helping people retrieve important information easily. Bear in mind, this is purely a demonstration of LangChain using Langflow, not a production application that can be shipped or deployed.

Steps to build a blog writer

We begin with the assumption that anyone hoping to recreate this can install Langflow and LangChain on their computer. We will follow this article up with a detailed installation and usability guide soon. For now, let's continue with the example below.

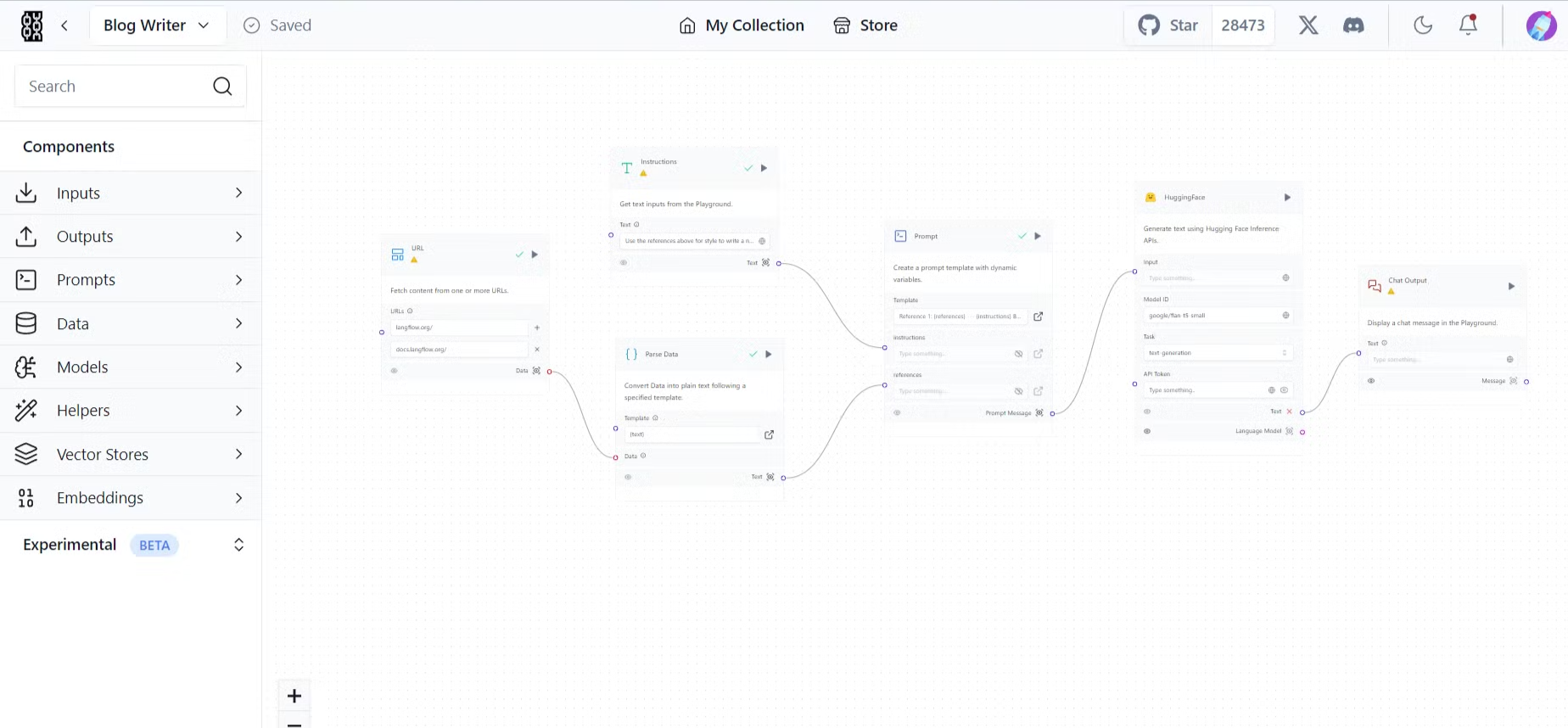

For readability, we have broken down the components of the Langflow UI below; the order of the steps does not follow the development flow.

Step 1: Choosing an Open-Source LLM

For this example, we'll use the FLAN-T5 model, which is an open-source language model known for its versatility and good performance across various tasks. In Langflow, you can use the "HuggingFaceHub" component to load this model.

Step 2: Setting Up the Document Loader

The first step in our RAG pipeline is to load the documents that will serve as our knowledge base. In Langflow, you can use the "DirectoryLoader" component to load documents from a specified directory.
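To make the loading step concrete, here is a minimal, library-free sketch of what a directory loader does: walk a folder and read each matching file into a record with its text and source path. The function name `load_text_documents`, the record keys, and the `*.txt` pattern are assumptions made for illustration; this is not the DirectoryLoader implementation.

```python
from pathlib import Path
import tempfile


def load_text_documents(directory, pattern="*.txt"):
    """Read every file matching `pattern` under `directory` (recursively)."""
    documents = []
    for path in sorted(Path(directory).rglob(pattern)):
        documents.append({
            "text": path.read_text(encoding="utf-8"),
            "source": str(path),  # kept so answers can be traced to a file
        })
    return documents


# Demo with a throwaway folder; the file names are hypothetical.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "ai_news.txt").write_text("AI chips are getting faster.", encoding="utf-8")
    (Path(tmp) / "notes.md").write_text("Skipped by the default pattern.", encoding="utf-8")
    demo_docs = load_text_documents(tmp)
```

Keeping the source path next to the text is what later lets a RAG system point back to where a statement came from.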

Step 3: Text Splitting

After loading the documents, we need to split them into smaller chunks for efficient processing. The "RecursiveCharacterTextSplitter" component in Langflow can be used for this purpose.
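The idea behind recursive splitting can be sketched in a few lines: try to break on the coarsest separator first (paragraphs), and fall back to finer ones (lines, words, single characters) only for pieces that are still too long. The sketch below is a simplified illustration under that assumption; the function name `recursive_split` is hypothetical, and unlike the real component it does not add overlap between chunks.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split `text` into chunks of at most `chunk_size` characters."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: cut at fixed character offsets.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: retry with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks


sample = "Para one is short.\n\nPara two is a bit longer than the first."
chunks = recursive_split(sample, chunk_size=30)
# chunks == ["Para one is short.", "Para two is a bit longer than", "the first."]
```

The short first paragraph survives intact, while the longer second one is broken at word boundaries rather than mid-word.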

Step 4: Creating Embeddings

Next, we need to convert our text chunks into numerical representations (embeddings) that can be efficiently searched. The "HuggingFaceEmbeddings" component can be used to create these embeddings.
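To show what "converting text to numbers" means in practice, here is a toy embedding: each word is hashed into one of 64 buckets and the resulting count vector is normalized to unit length. This is only a stand-in chosen so the example runs without any model download; real embedding models such as those behind HuggingFaceEmbeddings capture meaning far better. The names `embed` and `cosine` and the dimension of 64 are assumptions.

```python
import math
import re
import zlib


def embed(text, dim=64):
    """Toy embedding: hashed bag-of-words, normalized to unit length."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's built-in hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a, b):
    """Cosine similarity of two unit-length vectors (a plain dot product)."""
    return sum(x * y for x, y in zip(a, b))


same = cosine(embed("stress relief"), embed("relief stress"))
shared = cosine(embed("quantum computing news"), embed("quantum computing breakthroughs"))
```

Texts with the same words score close to 1.0 regardless of word order, and texts that share some words score above zero, which is the property the vector search relies on.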

Step 5: Setting Up the Vector Store

To store and efficiently search our embeddings, we'll use a vector store. The "Chroma" component in Langflow provides an in-memory vector store that's suitable for our purposes.
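Conceptually, a vector store keeps each embedding next to its text and answers "which stored vectors are closest to this one?". The following sketch does that with a plain Python list and cosine similarity. The class name `InMemoryVectorStore`, its methods, and the hand-written 3-dimensional vectors are assumptions for illustration; Chroma itself uses its own API and indexing.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Keeps (vector, text) rows in a list and searches them by brute force."""

    def __init__(self):
        self._rows = []

    def add(self, vector, text):
        self._rows.append((list(vector), text))

    def search(self, query_vector, k=2):
        """Return the k most similar rows as (score, text), best first."""
        scored = [(cosine_similarity(query_vector, vec), text) for vec, text in self._rows]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
store.add([1.0, 0.0, 0.0], "AI chips are getting faster.")
store.add([0.0, 1.0, 0.0], "Meditation can lower stress.")
store.add([0.9, 0.1, 0.0], "GPU prices fell this quarter.")
hits = store.search([1.0, 0.0, 0.0], k=2)
```

Brute-force scoring is fine for a demo-sized knowledge base; dedicated vector stores add indexing so the search stays fast as the collection grows.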

Step 6: Creating the Retriever

The retriever is responsible for finding relevant information from our vector store based on the input query. We can use the "VectorStoreRetriever" component in Langflow for this.
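A retriever wraps the search behind a single "query in, relevant chunks out" call. The sketch below illustrates that interface using word-count vectors as a stand-in for real embeddings, so it runs without a model. The class name `KeywordRetriever`, the method `get_relevant`, and the default of `k=2` are hypothetical and not the VectorStoreRetriever API.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


class KeywordRetriever:
    """Returns the top-k chunks by cosine similarity over word counts."""

    def __init__(self, chunks, k=2):
        self.k = k
        self._index = [(Counter(tokenize(chunk)), chunk) for chunk in chunks]

    @staticmethod
    def _cosine(a, b):
        dot = sum(count * b[token] for token, count in a.items())
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    def get_relevant(self, query):
        query_vec = Counter(tokenize(query))
        scored = [(self._cosine(query_vec, vec), chunk) for vec, chunk in self._index]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Drop chunks that share nothing with the query.
        return [chunk for score, chunk in scored[: self.k] if score > 0]


retriever = KeywordRetriever([
    "Quantum computing may speed up drug discovery.",
    "Meditation can lower stress levels.",
    "6G research promises faster networks.",
])
relevant = retriever.get_relevant("quantum computing trends")
```

Only the quantum computing chunk shares words with the query, so it is the only one returned even though `k` allows two results.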

Step 7: Setting Up the Prompt Template

We need to create a prompt template that instructs the model on how to use the retrieved information to write a blog post. The "PromptTemplate" component in Langflow allows us to define this template.
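A prompt template is essentially a string with named placeholders that are filled in at run time. As a rough sketch, the example below uses Python's standard `string.Template` with two placeholders, `context` and `topic`. The wording of the template and the helper name `build_prompt` are assumptions; the article does not show the exact prompt used in the flow.

```python
from string import Template

# Hypothetical wording; adjust the instructions to suit your own blog style.
BLOG_PROMPT = Template(
    "You are a blog writer. Use only the context below to write a blog post.\n\n"
    "Context:\n$context\n\n"
    "Topic: $topic\n\n"
    "Blog post:"
)


def build_prompt(topic, chunks):
    """Fill the template with the retrieved chunks and the requested topic."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return BLOG_PROMPT.substitute(context=context, topic=topic)


prompt = build_prompt(
    "emerging technology trends",
    ["6G research is already underway.", "Quantum computing is advancing."],
)
```

Telling the model to rely only on the supplied context is what ties the generated post back to the retrieved documents.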

Step 8: Creating the RAG Chain

Finally, we'll combine all these components into a RAG chain using the "RetrievalQA" component. This chain will take a blog topic as input, retrieve relevant information, and generate a blog post.
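The chain itself is mostly plumbing: retrieve chunks for the topic, build the prompt, and pass it to the model. Here is a minimal sketch of that control flow with stub functions in place of the real retriever, prompt, and LLM. All names (`make_rag_chain`, the `fake_*` stubs, the output keys) are hypothetical, and the stub model only echoes its prompt; this is not the RetrievalQA implementation.

```python
def make_rag_chain(retrieve, build_prompt, llm):
    """Compose three callables into a single topic -> blog post function."""

    def run(topic):
        chunks = retrieve(topic)              # 1. find relevant context
        prompt = build_prompt(topic, chunks)  # 2. put it into the prompt
        return {
            "result": llm(prompt),            # 3. let the model write
            "source_chunks": chunks,          # kept for transparency
        }

    return run


# Stubs standing in for the real components.
def fake_retrieve(topic):
    return ["6G research is already underway."]


def fake_build_prompt(topic, chunks):
    return f"Context: {' '.join(chunks)}\nTopic: {topic}"


def fake_llm(prompt):
    return "DRAFT based on -> " + prompt


chain = make_rag_chain(fake_retrieve, fake_build_prompt, fake_llm)
output = chain("6G networks")
```

Returning the source chunks alongside the result makes it easy to check which documents a given post was grounded in.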

Connecting the components

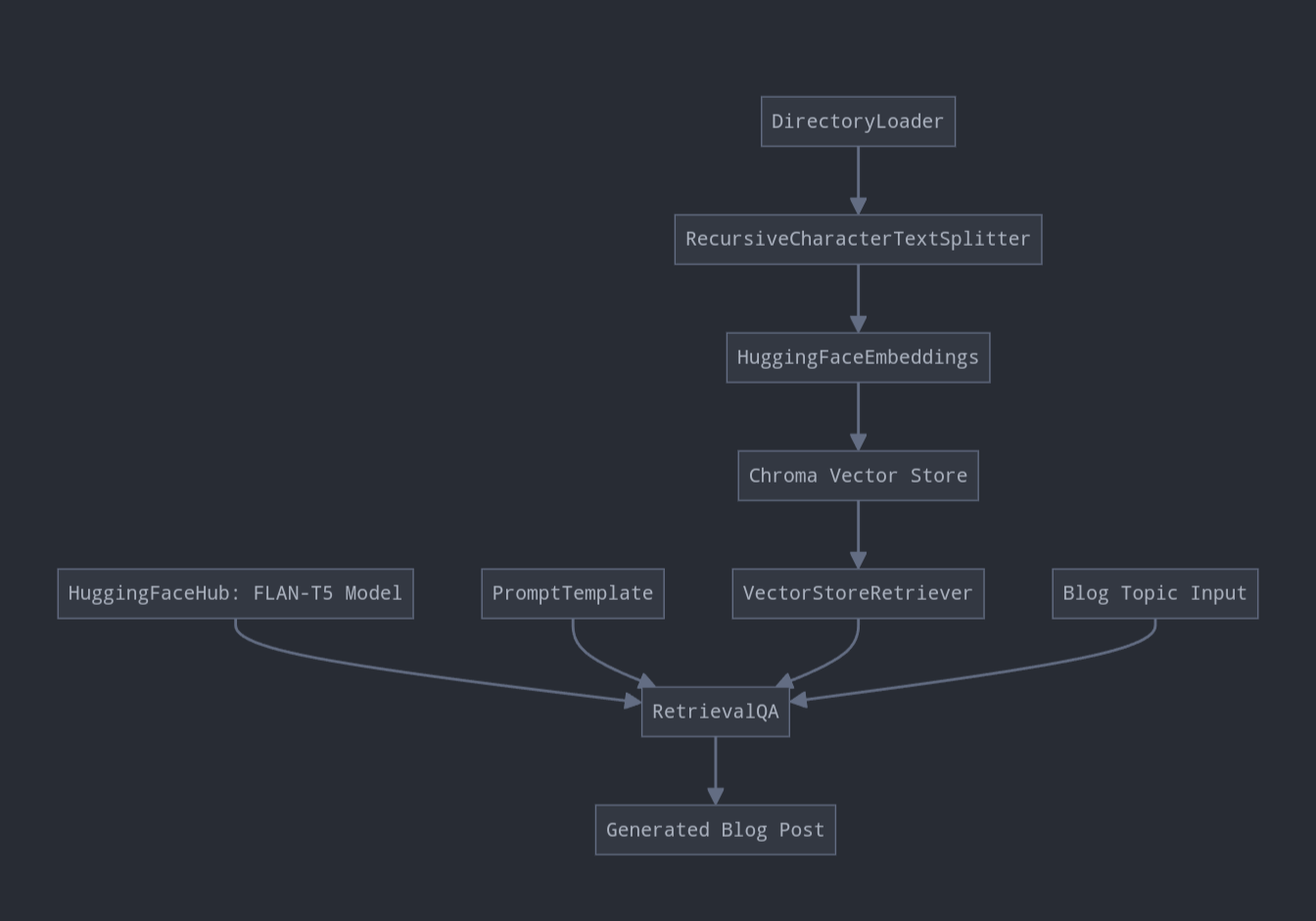

Here's how these components are interconnected in our Langflow setup:

- The DirectoryLoader loads documents, which are then split by the RecursiveCharacterTextSplitter.

- The split texts are converted to embeddings by HuggingFaceEmbeddings and stored in the Chroma vector store.

- The VectorStoreRetriever is connected to the Chroma store to enable retrieval.

- The HuggingFaceHub (FLAN-T5 model), PromptTemplate, and VectorStoreRetriever are all connected to the RetrievalQA component.

- The RetrievalQA component outputs the final blog post.

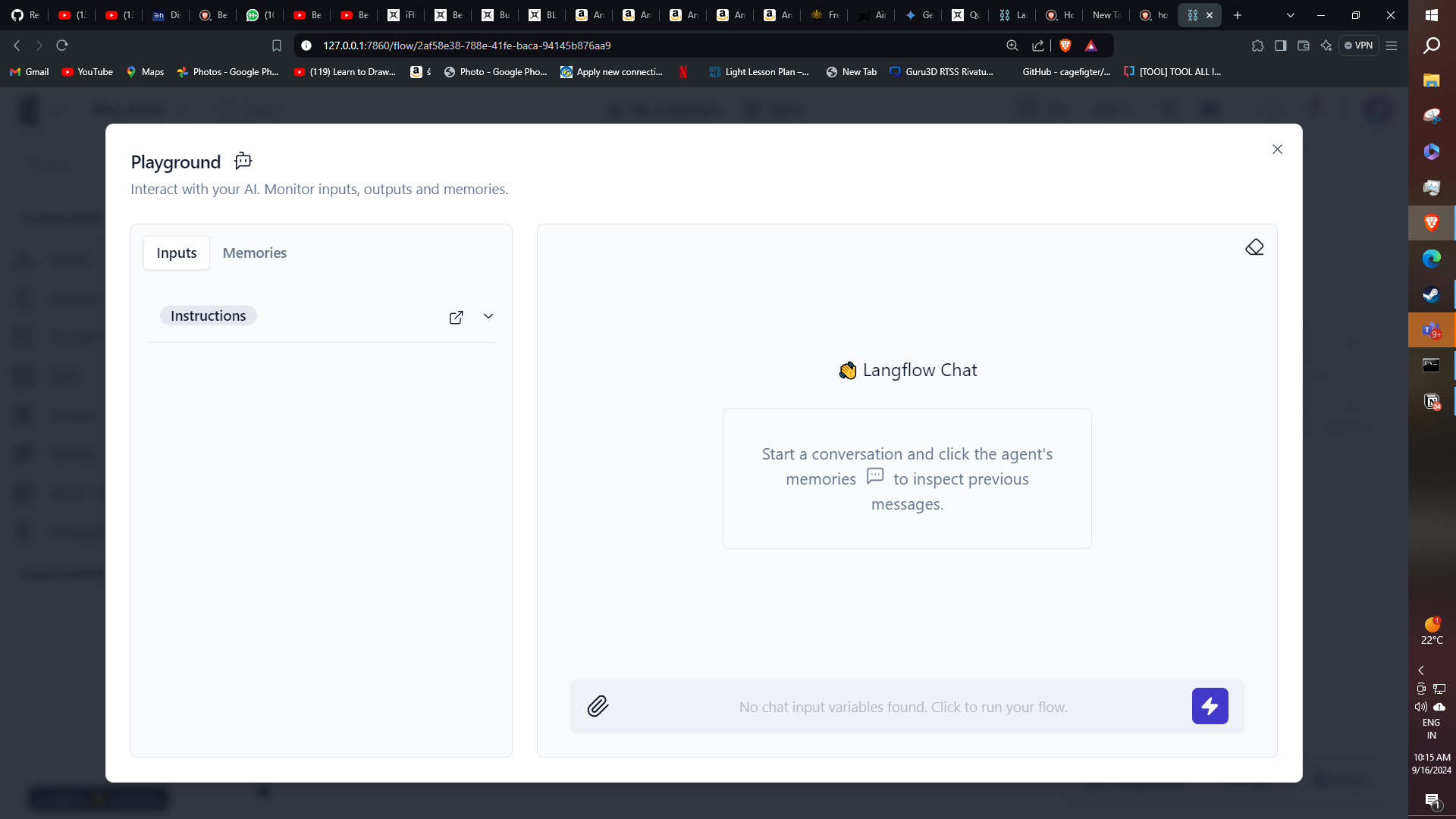

Once connected, load up the playground and see if the blog writer works.

After loading the content of all the relevant websites you would like the blog writer to read, run the API with a suitable input prompt.
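As a rough illustration of calling the flow over HTTP, the sketch below builds (but does not send) a POST request using only the standard library. It assumes a recent Langflow release running locally on port 7860 that exposes a `/api/v1/run/<flow_id>` route and accepts an `input_value` field; older releases used a different route, so check the API panel of your own flow. The flow ID `my-blog-writer-flow` and the helper name `build_run_request` are hypothetical.

```python
import json
import urllib.request


def build_run_request(flow_id, topic, base_url="http://127.0.0.1:7860", api_key=None):
    """Build a POST request for a Langflow flow (route and fields are assumptions)."""
    payload = {"input_value": topic, "input_type": "chat", "output_type": "chat"}
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["x-api-key"] = api_key  # only needed if your server requires a key
    return urllib.request.Request(
        url=f"{base_url}/api/v1/run/{flow_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


request = build_run_request(
    "my-blog-writer-flow",
    "Write a blog post about emerging technology trends in 2024.",
)
# With Langflow running, the request could be sent with:
#   with urllib.request.urlopen(request) as response:
#       print(json.load(response))
```

Separating request construction from sending keeps the example safe to run without a server and makes the payload easy to inspect.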

Example 1: Technology Trends

Input: "Write a blog post about emerging technology trends in 2024."

Output:

Title: Emerging Technology Trends Shaping Our Future in 2024

As we navigate through 2024, several cutting-edge technologies are revolutionizing the way we live and work. Here are some of the most significant trends to watch:

1. Artificial Intelligence and Machine Learning: AI continues to advance rapidly, with applications in healthcare, finance, and autonomous vehicles becoming more sophisticated and widespread.

2. Quantum Computing: Major breakthroughs in quantum computing are bringing us closer to solving complex problems in cryptography, drug discovery, and climate modeling.

3. Extended Reality (XR): The lines between virtual and physical worlds are blurring with advancements in augmented, virtual, and mixed reality technologies.

4. Sustainable Tech: Green technologies are gaining momentum, with innovations in renewable energy, carbon capture, and eco-friendly materials leading the charge.

5. 6G Networks: While 5G is still being rolled out globally, research into 6G is already underway, promising even faster and more reliable connectivity.

As these technologies continue to evolve, they will undoubtedly reshape industries and create new opportunities for innovation and growth.

Example 2: Health and Wellness

Input: "Create a blog post about the benefits of meditation for mental health."

Output:

Title: Unlocking Inner Peace: The Profound Benefits of Meditation for Mental Health

In our fast-paced, stress-filled world, finding moments of calm can seem like a luxury. However, the ancient practice of meditation is proving to be a powerful tool for maintaining and improving mental health. Here's why incorporating meditation into your daily routine can be transformative:

1. Stress Reduction: Regular meditation has been shown to lower cortisol levels, helping to alleviate stress and promote relaxation.

2. Improved Focus: By training your mind to stay present, meditation enhances concentration and cognitive function.

3. Emotional Regulation: Meditation cultivates mindfulness, allowing you to observe your thoughts and emotions without judgment, leading to better emotional control.

4. Anxiety Management: Studies have found that consistent meditation practice can significantly reduce symptoms of anxiety disorders.

5. Enhanced Self-Awareness: Through introspection, meditation helps you gain a deeper understanding of yourself and your thought patterns.

6. Better Sleep: By calming the mind and body, meditation can improve sleep quality and help combat insomnia.

7. Increased Compassion: Certain meditation techniques, like loving-kindness meditation, can foster empathy and compassion towards oneself and others.

Remember, meditation is a skill that improves with practice. Even a few minutes a day can lead to significant benefits for your mental health and overall well-being.

These examples demonstrate how our RAG-based blog writer can generate informative and coherent content on various topics by leveraging retrieved information and the capabilities of the language model.

Conclusion

Creating a RAG-based blog writer API using LangChain and Langflow offers a powerful solution for generating high-quality, informative content. By combining the strengths of retrieval and generation, we can help ensure that the produced blog posts are not only well-written but also grounded in accurate, up-to-date information. As enterprises continue to seek efficient ways to create content and streamline their workflows, RAG-based solutions like this are likely to play an increasingly important role.

Through this showcase, Codemonk aims to do just that: show enterprises what they can develop (with some help, of course) and what they can achieve. We firmly believe that intelligent engineering makes humans better, and with that in mind, we continue to build products and services that better us.